Introduction

The original blog and website can be found at gaussmath.ai.

Large Language Models (LLMs) have made remarkable progress in mathematics — spanning the full range from K–12 curriculum problems to Olympiad-level challenges, and even to research-level problems.

But existing benchmarks don’t tell the whole story. They mainly measure final accuracy — whether the model arrived at the right answer. What they miss is how the model got there. Did it recall the correct theorem? Apply a systematic strategy? Use intuition to check plausibility? Or did it simply memorize a pattern?

To push beyond these limits, we built GAUSS (General Assessment of Underlying Structured Skills in Mathematics). Developed at Hyperbolic Labs, GAUSS is a skill-based benchmark that evaluates not just outcomes, but the cognitive abilities underlying mathematical reasoning.

Why GAUSS?

1. Low Skill Resolution

Traditional benchmarks have low skill resolution — they only report whether the final answer is correct. But solving a math problem usually involves multiple skills: recalling knowledge, performing symbolic computations, constructing proofs, or generalizing across domains.

GAUSS disentangles these skills, tagging each problem with the exact abilities it requires. This allows researchers to see a model’s skill profiles, not just a single score.

2. Saturation

Many widely used datasets (e.g. GSM8K, MATH) are already saturated — top models achieve near-perfect scores, leaving little room to measure progress. GAUSS addresses this with fresh, carefully curated problems from Olympiads, graduate coursework, and research-level sources.

3. Contamination

Existing benchmarks often contain problems already seen in training data, inflating results. GAUSS minimizes contamination by drawing from diverse and novel sources, ensuring that evaluation reflects math abilities, not memorization.

The GAUSS Framework

GAUSS organizes mathematical ability into three domains and twelve skills:

Mathematical Knowledge and Understanding

Memory of Math Knowledge

Understanding of Knowledge and Theories

Computational and Analytical Skills

Problem Solving and Communication

Problem-Solving Framework

Logical Thinking and Reasoning

Writing and Presentation

Learning, Meta Skills, and Creativity

Learning New Knowledge

Intuition

Meta Skills

Mathematical Modeling

Generalization

Creativity

This structure provides a comprehensive breakdown of mathematical cognition, from foundational recall to creative problem posing.

Sample Problem and Evaluation Pipeline

Problem

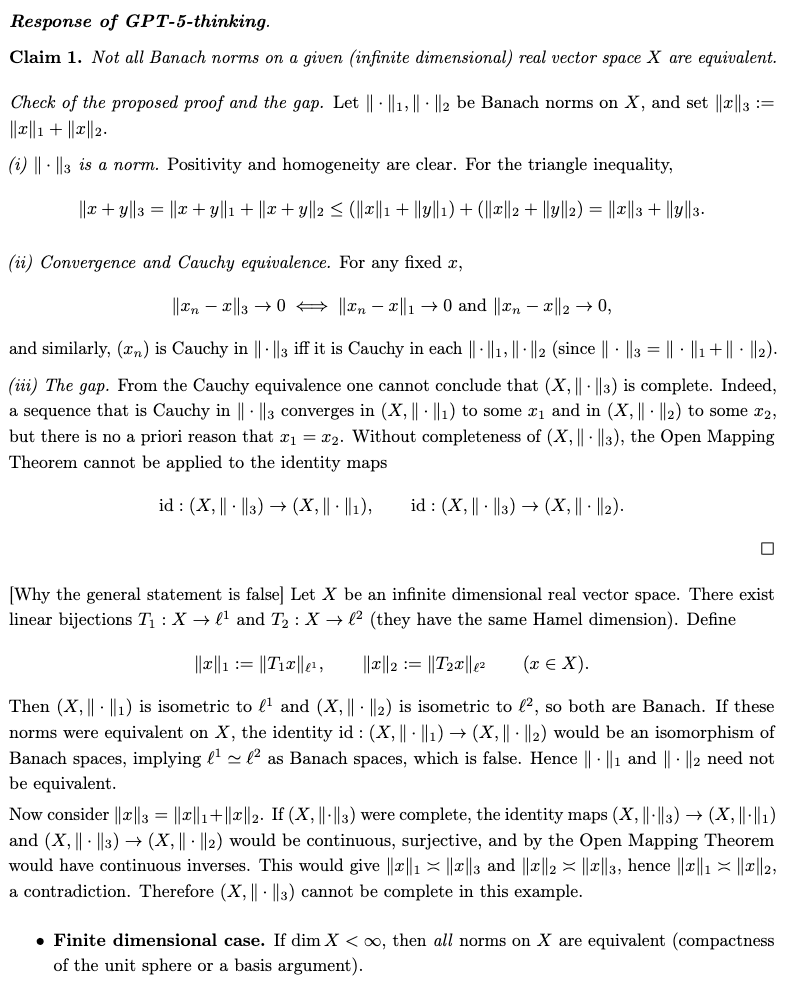

GPT-5 Thinking Response

Standard Solution

Rubric

Score and Evaluation

What GAUSS Reveals

Applied to GPT-5 Thinking, GAUSS highlights a highly uneven skill profile:

Strengths: subject taxonomy, evaluating flawed arguments, plausibility checks, reading and summarizing advanced material, and posing new problems.

Weaknesses: understanding and applying theorems (0%), symbolic computation (0%), structured problem-solving strategies (0%), geometric intuition (0%), mathematical modeling (0%) and generalization (17%).

Compared to o4-mini-high, GPT-5 thinking shows clear gains in taxonomy, argument evaluation, plausibility check, and reading, yet its core theoretical understanding remains fragile.

Strengths of GPT-5 Thinking

Taxonomy of Knowledge:

GPT-5 Thinking is much stronger at identifying the correct mathematical domain of a problem. This makes it reliable for automated tagging and classification.

Evaluating Arguments:

It shows clear gains in checking whether reasoning is valid. The model spots missing assumptions, invalid inductive steps, or hidden gaps that GPT-o4-mini-high often overlooked. This makes it useful as a logical consistency checker in auto-evaluation.

Plausibility Check:

Shows progress in using heuristic shortcuts—for example, performing a plausibility check—to narrow down candidate answers before committing to a formal solution.

Reading New Material:

Demonstrates strong ability to parse unseen definitions, new notations, and multi-page contexts; this also supports literature summarization and rapid knowledge assimilation.

Posing Problems:

GPT-5 shows strong ability to pose new problems after reading or summarizing material. Instead of only consuming definitions and theorems, it can generate follow-up questions, exercises. This capability could be leveraged as an automatic prompting tool, helping guide learners and researchers.

Weaknesses of GPT-5 Thinking

Despite these strengths, GPT-5 Thinking performs poorly in several foundational areas:

Understanding of knowledge and theories (0%):

struggles to comprehend and apply theorems, even when directly relevant.

Computational and analytical skills (0%):

fails at symbolic manipulation and step-by-step computations.

Application of problem-solving strategies (0%):

lacks systematic use of standard frameworks such as induction, contradiction, or constructive methods.

Geometric Intuition (0%):

fails to recognize or leverage spatial structures, visual heuristics, or geometric reformulations of problems.

Generalization (17%):

weak at extending solutions beyond the immediate case, indicating limited abstraction ability.

Looking Ahead

We plan to release a comprehensive GAUSS system that combines new features with a structured development pipeline:

Problem and dataset curation: define standardized problem formats and rubrics for each GAUSS skill category, and curate problem sets with canonical solutions to build a reliable evaluation corpus.

Radar-style skill charts to visualize strengths and weaknesses of LLMs across the GAUSS skill breakdown.

Community crowdsourcing to expand coverage across mathematical domains and enrich the benchmark.

AI evaluation system: design and implement an AI evaluator that can automatically verify the correctness and quality of model responses.

Math AI Model Training: experimenting with the GAUSS dataset and AI evaluator to probe and develop next-generation math AI models.

Adversarial/cooperative training loop: integrate problem generation, problem solving, and evaluation into a tri-party adversarial/cooperative pipeline (e.g., GAN, GAIL style), where AI systems can co-evolve as generators, solvers, and evaluators.

Conclusion

Ultimately, GAUSS goes beyond a benchmark. It marks a methodological shift — from asking “Did the model solve the problem?” to “What are the model’s strengths and weaknesses by skill?”

By exposing both strengths and brittle edges, GAUSS provides a roadmap for building the next generation of AI systems — systems that go beyond producing answers to demonstrate real reasoning, learning, and discovery.

About Authors

Authors:

Yue Zhang¹*, Jiaxin Zhang¹²*, Qiuyu Ren³, Tahsin Saffat³, Xiaoxuan Liu³, Zitong Yang⁴, Banghua Zhu⁵⁶, Yi Ma³⁷

Equal Contribution

Affiliations:

¹ Hyperbolic Labs ² California Institute of Technology ³ University of California, Berkeley ⁴ Stanford University ⁵ Nvidia ⁶ University of Washington ⁷ University of Hong Kong

About Hyperbolic

Hyperbolic is the on-demand AI cloud made for developers. We provide fast, affordable access to compute, inference, and AI services. Over 195,000 developers use Hyperbolic to train, fine-tune, and deploy models at scale.

Our platform has quickly become a favorite among AI researchers, including those like Andrej Karpathy. We collaborate with teams at Hugging Face, Vercel, Quora, Chatbot Arena, LMSYS, OpenRouter, Black Forest Labs, Stanford, Berkeley, and beyond.

Founded by AI researchers from UC Berkeley and the University of Washington, Hyperbolic is built for the next wave of AI innovation—open, accessible, and developer-first.

Website | X | Discord | LinkedIn | YouTube | GitHub | Documentation

:format(webp))

:format(webp))

:format(webp))

:format(webp))

:format(webp))

:format(webp))

:format(webp))

:format(webp))